15. Storage replication plugin

A storage replication service is a managed service in which stored or archived data is duplicated in real time. It provides an extra measure of protection that can be invaluable if the main storage backup system fails. Immediate access to the replicated data minimizes downtime and its associated costs. If properly implemented, the service streamlines disaster recovery by continuously generating duplicate copies of all backed-up files, which speeds up and simplifies recovery after a disaster such as a fire, flood, hurricane, or virus attack.

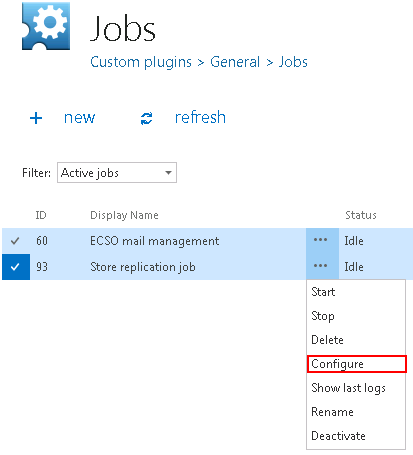

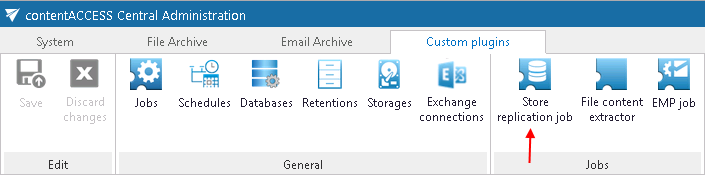

After you have successfully created a new Store replication job (for more information on how to create a new job, refer to section Creating new jobs in contentACCESS), you can start configuring it. Navigate to Custom plugins ⇒ General ⇒ Jobs and choose the job from the list. Click the ellipsis (…) and choose the Configure option from the context menu.

✓ Database settings: Select an existing connection from the dropdown list, or create a new one. The job will save its metadata to this database. (For further information on how to set database connections, refer to section Databases.)

✓ Scheduling settings: The store replication job runs at the times defined by the selected scheduler. In the screenshot below, the “Always” scheduler is selected, which runs the job without interruption. For more information on how to configure scheduler settings, refer to section Schedules described above.

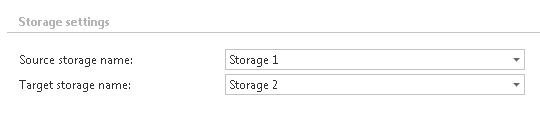

✓ Storage settings: Configure the source folder and the target folder(s) here. The source folder is the primary folder. In case of technical problems, the required documents are recovered from the secondary (target) folder with the help of the mapping data saved in contentACCESS.

✓ Resource settings: Define how many items (source folders) are crawled simultaneously. Setting a value higher than twice the number of processor cores is not advisable, as this significantly slows down the whole processing.
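
To make the storage and resource settings more concrete, the following Python sketch illustrates the general idea only. It is not how contentACCESS implements replication. The function names `max_parallel_items` and `replicate` are hypothetical, and the in-memory dictionary merely stands in for the mapping data that contentACCESS keeps in its database. The sketch copies files from one source folder to one or more target folders and caps the number of parallel workers at twice the number of processor cores.

```python
import os
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def max_parallel_items(requested: int) -> int:
    """Clamp the requested parallelism to at most twice the number of CPU cores."""
    cores = os.cpu_count() or 1  # cpu_count() may return None on some platforms
    return max(1, min(requested, 2 * cores))


def replicate(source: Path, targets: list[Path], requested_workers: int = 4) -> dict[str, list[str]]:
    """Copy every file under `source` into each target folder.

    Returns a mapping from each source file path to the paths of its copies
    (a stand-in for mapping data; hypothetical, not the contentACCESS schema).
    """
    files = [p for p in source.rglob("*") if p.is_file()]

    def copy_one(src: Path) -> tuple[str, list[str]]:
        rel = src.relative_to(source)
        copies = []
        for target in targets:
            dst = target / rel
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copies content and file metadata
            copies.append(str(dst))
        return str(src), copies

    workers = max_parallel_items(requested_workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(copy_one, files))
```

With such a mapping, a document missing from the primary folder could be located in a target folder by looking up its source path. The clamp in `max_parallel_items` mirrors the recommendation above: requesting more workers than twice the core count has no effect.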